Every so often, a project calls for some web scraping. For those of us who treat this as a once-in-a-while task, that means being painfully reminded of the annoying tangle of BeautifulSoup, maybe Selenium, and maybe Chromedriver.

With traditional approaches, you need to understand the specific structure of each website in order to get the exact information you care about. If the website changes its format, all that work is gone, and you may need to start over. And any serious website has security barriers, request limits, and so on, all of which make you dread the next time you need to scrape the web.

As someone who has spent the last five years in deep learning and knows very little about traditional software engineering or front-end dev, I saw a silly possibility: using new SOTA Vision Language Models (VLMs) like GPT-4o to help with web scraping.

So I prototyped it in a few hours, gave it a try, and it kind of worked. I'm sure there are applications where this is more useful, but in this case of scraping NYT articles, it helped me get past the login and anti-scraping barriers, so I appreciated it!

Stage 1: Scrape Web Images with Chromedriver

The first stage uses Chromedriver to open a browser and scroll through a website while taking minimally overlapping screenshots that together capture the entire page. These screenshots are saved to a directory. Because the page is rendered in a real browser, content loaded dynamically via JavaScript is captured too, giving a complete visual record of the webpage.

A pretty big advantage here is that running Chromedriver with a Google Chrome profile that is already logged in lets you browse and scrape websites that would otherwise sit behind burdensome permission blocks.
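Here is a minimal sketch of what this stage could look like with Selenium. It is not the code from the repo: the function names, the 50-pixel overlap, the half-second pause, and the `shot_000.png` naming scheme are all assumptions made for illustration. The scroll positions are computed by a small pure function so that part can be checked without launching a browser.

```python
import time
from pathlib import Path
from typing import List, Optional


def scroll_offsets(page_height: int, viewport_height: int, overlap: int = 50) -> List[int]:
    """Y-offsets to scroll to so that consecutive screenshots overlap by `overlap` pixels."""
    if viewport_height <= overlap:
        raise ValueError("viewport_height must be larger than overlap")
    step = viewport_height - overlap
    offsets = [0]
    # Keep stepping down until the last viewport reaches the bottom of the page.
    while offsets[-1] + viewport_height < page_height:
        offsets.append(offsets[-1] + step)
    return offsets


def capture_page(url: str, out_dir: str, profile_dir: Optional[str] = None) -> List[Path]:
    """Scroll through `url` and save one screenshot per viewport (hypothetical helper)."""
    # Imported lazily so scroll_offsets() works even without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    if profile_dir:
        # Reuse an existing Chrome profile so its logged-in sessions apply.
        options.add_argument(f"--user-data-dir={profile_dir}")
    driver = webdriver.Chrome(options=options)

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    try:
        driver.get(url)
        # Measured once up front; pages that grow while scrolling would need re-measuring.
        page_height = driver.execute_script("return document.body.scrollHeight")
        viewport_height = driver.execute_script("return window.innerHeight")
        for i, y in enumerate(scroll_offsets(page_height, viewport_height)):
            driver.execute_script("window.scrollTo(0, arguments[0]);", y)
            time.sleep(0.5)  # assumed pause to let lazy-loaded content render
            path = out / f"shot_{i:03d}.png"  # zero-padded so files sort in page order
            driver.save_screenshot(str(path))
            paths.append(path)
    finally:
        driver.quit()
    return paths
```

For a 1000-pixel page in a 400-pixel viewport, `scroll_offsets(1000, 400)` gives three scroll positions, each overlapping the previous screenshot by 50 pixels.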

Stage 2: OCR with GPT-4o VLM

In the second stage, the saved screenshots are sent to the GPT-4o API to extract text. To speed this up, these are sent in parallel with ThreadPoolExecutor. Upon casual inspection, GPT-4o does a pretty good job of extracting relevant text, which is saved to a series of .txt files. I'd be surprised if GPT-4o doesn't have some sort of OCR tool.

Here, there is the potential for other kinds of web-scraping analysis that go beyond traditional BeautifulSoup-style scraping. For example, if we want to include descriptive captions for the images in the article transcription, that is something you would need a VLM for.
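A rough sketch of this stage, assuming the `openai` Python package (v1 or later) with an `OPENAI_API_KEY` set in the environment. The prompt text, the function names, and the worker count are placeholders of mine, not what the repo uses. The transcriber is passed in as an argument so the parallel plumbing can be tried with a stand-in function before spending money on API calls.

```python
import base64
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, List

# Hypothetical prompt; changing it is how you would ask for captions, bylines, etc.
PROMPT = "Transcribe the article text visible in this screenshot verbatim."


def transcribe_with_gpt4o(image_path: Path) -> str:
    """Send one screenshot to GPT-4o and return the extracted text."""
    from openai import OpenAI  # lazy import; the client reads OPENAI_API_KEY from the environment

    b64 = base64.b64encode(image_path.read_bytes()).decode("ascii")
    client = OpenAI()
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{
            "role": "user",
            "content": [
                {"type": "text", "text": PROMPT},
                {"type": "image_url",
                 "image_url": {"url": f"data:image/png;base64,{b64}"}},
            ],
        }],
    )
    return response.choices[0].message.content or ""


def transcribe_all(
    image_dir: str,
    out_dir: str,
    transcribe: Callable[[Path], str] = transcribe_with_gpt4o,
    max_workers: int = 8,
) -> List[Path]:
    """Transcribe every PNG in `image_dir` in parallel and write one .txt per image."""
    images = sorted(Path(image_dir).glob("*.png"))
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in input order, so chunks stay in page order.
        texts = list(pool.map(transcribe, images))
    written = []
    for image, text in zip(images, texts):
        path = out / f"{image.stem}.txt"
        path.write_text(text, encoding="utf-8")
        written.append(path)
    return written
```

Threads are a reasonable fit here because each call spends nearly all of its time waiting on the network rather than using the CPU.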

Stage 3: Synthesize Full Transcript

The last stage simply takes a directory of .txt file chunks, sequentially concatenates them, and asks GPT-4o to synthesize them into a single .txt article verbatim. Modern LLMs should have no problem doing this relatively simple task. Be careful to ask for an exact synthesis, and make sure you have sufficient output tokens to capture the whole article.
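As a sketch of how this could be wired up, again assuming the `openai` package (v1 or later): the instruction wording, the chunk separator, the function names, and the `max_tokens` value of 4096 are my own placeholders. It also assumes the chunk files have zero-padded names so that sorting them alphabetically matches page order. Checking `finish_reason` is one way to notice when the output token limit cut the article short.

```python
from pathlib import Path

# Hypothetical instruction text; the key point is asking for an exact, verbatim merge.
INSTRUCTIONS = (
    "The chunks below are transcriptions of overlapping screenshots of one article, "
    "in order. Merge them into a single article. Reproduce the text exactly, and "
    "include text repeated across neighboring chunks only once."
)
SEPARATOR = "\n\n--- CHUNK BREAK ---\n\n"


def build_synthesis_prompt(chunk_dir: str) -> str:
    """Concatenate the .txt chunks in filename order under the instructions."""
    chunks = [p.read_text(encoding="utf-8")
              for p in sorted(Path(chunk_dir).glob("*.txt"))]
    return INSTRUCTIONS + "\n\n" + SEPARATOR.join(chunks)


def synthesize(chunk_dir: str, out_path: str, max_tokens: int = 4096) -> str:
    """Ask GPT-4o for the merged article and save it to `out_path`."""
    from openai import OpenAI  # lazy import; the client reads OPENAI_API_KEY from the environment

    client = OpenAI()
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": build_synthesis_prompt(chunk_dir)}],
        max_tokens=max_tokens,  # assumed value; must be large enough for the whole article
        temperature=0,
    )
    choice = response.choices[0]
    if choice.finish_reason == "length":
        # The model hit the output limit, so the saved article is truncated.
        print("Warning: output was cut off; raise max_tokens or split the article.")
    text = choice.message.content or ""
    Path(out_path).write_text(text, encoding="utf-8")
    return text
```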

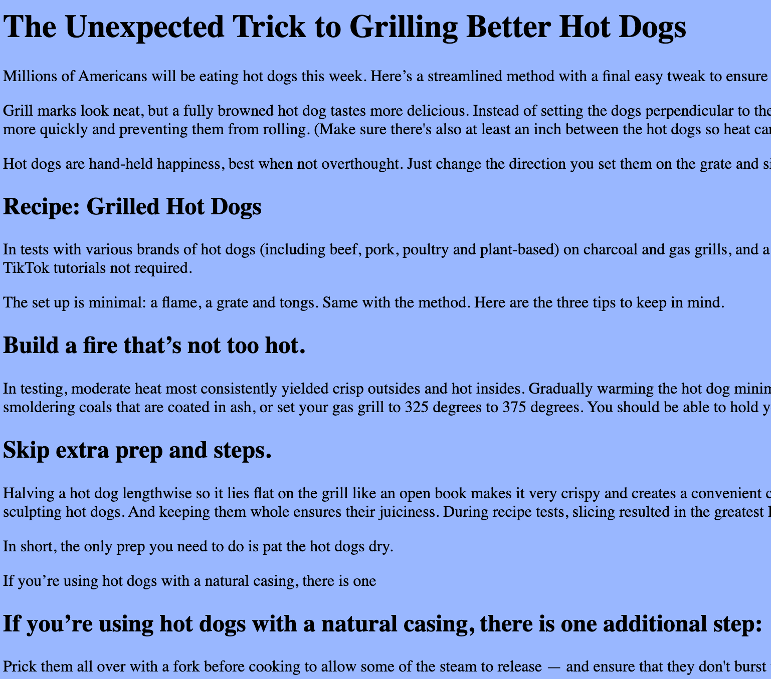

Below you can see part of the output .html file. Whether to include things like the author or captions can easily be changed by modifying the scraping prompt.

Performance?

Here are some metrics calculated by GPT, comparing matching chunks of the original NYT article and the version produced by this scraping pipeline. If you're looking for perfection, this is lacking. But from a computer vision perspective, not too bad!

- Jaccard Similarity Index: Measures the similarity between the sets of tokens in the original and scraped texts. Value: 0.9295. Interpretation: High similarity between the two texts.

- Word-Level Accuracy: Measures the proportion of words in the original text that appear in the scraped text. Value: 0.9339. Interpretation: Approximately 93.39% of the words in the original text are in the scraped text.

- Character-Level Accuracy: Measures the similarity between the original and scraped texts based on character sequences. Value: 0.8848. Interpretation: The character sequences in the original and scraped texts are about 88.48% similar.

- Levenshtein Distance: Measures the minimum number of single-character edits (insertions, deletions, or substitutions) needed to change one text into the other. Value: 306. Interpretation: 306 single-character edits are needed to transform the original text into the scraped text.
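If you would rather compute these yourself than ask GPT, here is one way the four metrics could be defined in plain Python. The numbers above came from GPT, so I can't promise these definitions match what it actually computed. In particular, lowercased word tokens and `difflib.SequenceMatcher` for the character-level score are my assumptions.

```python
import difflib
import re
from typing import List


def tokens(text: str) -> List[str]:
    """Lowercased word tokens; punctuation is dropped."""
    return re.findall(r"\w+", text.lower())


def jaccard(original: str, scraped: str) -> float:
    """Size of the shared token set divided by the size of the combined token set."""
    a, b = set(tokens(original)), set(tokens(scraped))
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def word_accuracy(original: str, scraped: str) -> float:
    """Fraction of the original's words that appear anywhere in the scraped text."""
    orig = tokens(original)
    if not orig:
        return 1.0
    scraped_words = set(tokens(scraped))
    return sum(word in scraped_words for word in orig) / len(orig)


def char_similarity(original: str, scraped: str) -> float:
    """Character-sequence similarity in [0, 1] from difflib's matching blocks."""
    return difflib.SequenceMatcher(None, original, scraped).ratio()


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute, free if the characters match
            ))
        prev = curr
    return prev[-1]
```

The Levenshtein loop is quadratic in text length, which is fine for a single article but slow for large batches.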

Analysis and Thoughts

Overall, it feels funny and fun to explore this idea. Some initial thoughts:

- [-] This method is not cheap: it is far more expensive than using requests or BeautifulSoup, which are essentially free. Each screenshot you send to GPT-4o for OCR costs about 1c, which means an article can cost 10-20c. However, locally hosted models or simpler OCR models (maybe Tesseract?) could bring this cost down to almost 0.

- [-] This method is also pretty slow: even with Chromedriver taking milliseconds per screenshot and the GPT-4o calls running in parallel, it still takes 10+ seconds to transcribe an article

- [-] As we're using computer vision, this method will not have perfect performance

- [+] Inspired by security/permissions challenges, I found that this method finally allowed me to scrape NYT articles

- [+] Can capture information from dynamically rendered JavaScript content

- [+] If you connect this with more advanced Chromedriver functionality (e.g. clicking, moving between URLs) you can probably do more complex tasks. One example could be waiting for a reservation to open up and then sending you a message.

Let me know what you think at egoodman92@gmail.com. Still building up the repo to make it more robust. If there is a much easier way of scraping NYT articles, or you have another use case this may be interesting for, please let me know!

Check out the code!